Dolby Vision, HDR10, and HLG are all HDR standards. Before comparing the three, it helps to understand what HDR is.

What is HDR?

HDR stands for high dynamic range. Simply put, it is a technique that extends the range of brightness and contrast an image can show.

It reveals detail in dark areas, keeps shadows deep, preserves bright highlights, and renders richer colors, so movies and pictures look closer to what you would see in a real environment. That is the purpose of HDR.

What Is Dolby Vision?

Dolby Vision is a proprietary HDR technology developed by Dolby. It aims to improve image quality by adding greater depth, higher contrast, and a wider range of colors to movies, TV shows, and games.

It dynamically optimizes the image based on your service, device, and platform, so that each one gets as much out of HDR as it can deliver.

The human eye can perceive brightness from about 0.001 nits at its darkest to 20,000 nits at its brightest. The dynamic range of current Blu-ray video is far narrower after passing through the shoot > post > master > transport > display chain: about 0.117 nits at the darkest and a mere 100 nits at the brightest.
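
To put those figures in perspective, dynamic range is often expressed in stops, where each stop is a doubling of luminance. The short sketch below converts the nit values quoted above into stops. The function name `dynamic_range_stops` is our own, and the inputs are the figures cited in this article, not measurements.

```python
import math

def dynamic_range_stops(darkest_nits: float, brightest_nits: float) -> float:
    """Return the dynamic range in stops (doublings of luminance)."""
    if darkest_nits <= 0 or brightest_nits <= darkest_nits:
        raise ValueError("expected 0 < darkest_nits < brightest_nits")
    return math.log2(brightest_nits / darkest_nits)

# Figures quoted in the article (not measurements).
human_eye = dynamic_range_stops(0.001, 20_000)
blu_ray = dynamic_range_stops(0.117, 100)

print(f"Human eye: {human_eye:.1f} stops")
print(f"Blu-ray video: {blu_ray:.1f} stops")
```

With these inputs, the eye's range works out to roughly 24 stops, while the Blu-ray figures give a little under 10.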

Dolby Vision tries to rebuild that end-to-end chain (shoot > post > master > transport > display) so that the brighter highlights and richer colors in the video are preserved and reproduced, and viewers can see the improvement when they watch on a TV.

What Is HDR10?

HDR10 is also called the HDR10 Media Profile. It is an open HDR video standard announced by the CTA (Consumer Technology Association), a US trade association headquartered in Virginia. HDR10 uses 10 bits of color depth, and its content is commonly mastered for a peak brightness of 1,000 nits (the nit is a unit of brightness), although the format itself supports higher peaks. As an open standard, it is widespread in home theater devices, including TVs and projectors. HDR10+ is a related HDR standard, and it is royalty-free.

What Is HLG?

HLG stands for Hybrid Log-Gamma. Like the two standards above, it is an HDR standard. It was jointly developed by the BBC and Japan's NHK. It carries SDR and HDR in a single signal, so the same content also plays correctly on an SDR monitor.

Compared with HDR10, it adapts better to lower-end displays, supporting brightness from a minimum of 500 nits to a maximum of 2,000 nits. Sony has used this technology for a long time. HLG is also royalty-free, which has helped it reach high market acceptance at low cost.
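
The name "Hybrid Log-Gamma" describes the shape of the signal curve: a gamma-like segment for darker tones, which SDR displays can interpret, joined to a logarithmic segment for highlights. The sketch below implements the HLG OETF as we understand it from ITU-R BT.2100. The formula does not come from this article, and the function name `hlg_oetf` is our own, so treat it as an illustration rather than a reference implementation.

```python
import math

# Constants of the HLG OETF as given in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                   # about 0.28466892
C = 0.5 - A * math.log(4 * A)   # about 0.55991073

def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene light in [0, 1] to an HLG signal value in [0, 1]."""
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be in [0, 1]")
    if scene_light <= 1 / 12:
        # Gamma-like (square-root) segment for darker tones.
        return math.sqrt(3 * scene_light)
    # Logarithmic segment for highlights.
    return A * math.log(12 * scene_light - B) + C

for light in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two segments meet at a signal value of 0.5, so the lower half of the signal range behaves much like a conventional gamma curve and the upper half is reserved for highlights.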

Dolby Vision vs HDR10 vs HLG

With the three standards introduced above, some projector users may still wonder how they differ.

| | Dolby Vision | HDR10 | HLG |
| --- | --- | --- | --- |
| Licensing | Proprietary | Open | Royalty-free |
| Availability | Less widespread | Widespread | Widespread |
| Metadata | Dynamic | Static | Static |
| Image Quality | Best | Good | Good |
| Peak Brightness | 10,000 nits | 4,000 nits | 4,000 nits |

As the table above shows, Dolby Vision is a proprietary standard, HDR10 is an open standard, and HLG is royalty-free. Because Dolby Vision is proprietary, it is less common in home theater equipment than the other two.

Regarding metadata, Dolby Vision is the only one of the three that uses dynamic metadata; the other two use static metadata. The difference between static and dynamic metadata is explained in the FAQs below.

As for image quality, Dolby Vision has the best overall image performance.

As for peak brightness, Dolby Vision supports the highest value of the three, 10,000 nits.

FAQs

What Is Metadata?

Metadata is the additional image information carried within the content, including the color and brightness information used when the HDR image was mastered. The display device uses HDR metadata to render the picture more accurately. By content and scope, it is divided into static metadata and dynamic metadata.

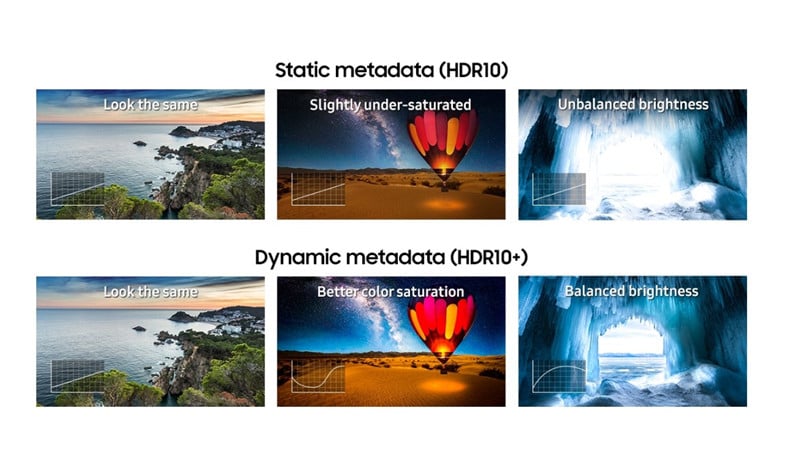

What Are the Differences Between Static Metadata and Dynamic Metadata?

Static metadata means that a single set of metadata is used for the whole video to control the color and detail of every frame; it does not change between frames.

Dynamic metadata allows separate metadata for each frame or each scene change. The corresponding standard for dynamic metadata is SMPTE ST 2094, published in 2016.

HDR formats can therefore be classified as static or dynamic. Dynamic HDR applies metadata scene by scene, which delivers better-optimized picture quality than static HDR.
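
A toy sketch can show why per-scene metadata helps. It does not reflect how any real display or HDR format encodes or applies metadata: the `Scene` class, the `tone_map` function, the linear scaling, and all nit values are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass

@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest pixel in this scene (hypothetical value)

def tone_map(pixel_nits: float, content_peak: float, display_peak: float) -> float:
    """Toy tone mapper: scale linearly only when the content exceeds the display."""
    if content_peak <= display_peak:
        return pixel_nits
    return pixel_nits * display_peak / content_peak

DISPLAY_PEAK = 500.0  # hypothetical display capability
scenes = [Scene("night street", 300.0), Scene("sunset", 4000.0)]

# Static metadata: one peak value describes the whole video.
static_peak = max(scene.peak_nits for scene in scenes)

# Follow a 200-nit pixel through each scene under both approaches.
for scene in scenes:
    static_out = tone_map(200.0, static_peak, DISPLAY_PEAK)
    dynamic_out = tone_map(200.0, scene.peak_nits, DISPLAY_PEAK)
    print(f"{scene.name}: static={static_out:.0f} nits, dynamic={dynamic_out:.0f} nits")
```

In this toy model, the static approach dims the night scene to 25 nits because the whole video is scaled for the 4,000-nit sunset, while the per-scene approach leaves it at 200 nits since that scene already fits within the display's range.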

What Are The Display Requirements for HDR?

The display requirements for HDR are as follows. A built-in display should have a resolution of 1080p or higher and a recommended maximum brightness of 300 nits or more.

An external HDR display or TV must support HDR10 and connect over DisplayPort 1.4, HDMI 2.0 or higher, USB-C, or Thunderbolt. To find the specifications for a specific PC or external display, visit the device manufacturer's website.
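
The requirements above can be written as a simple checklist. This is a minimal sketch under our own reading of them: the function names and the `HDR_CONNECTIONS` set are hypothetical, and we assume that an external display needs both HDR10 support and one of the listed connections.

```python
# Connections listed in the requirements; "HDMI 2.0 or higher" is taken to include HDMI 2.1.
HDR_CONNECTIONS = {"DisplayPort 1.4", "HDMI 2.0", "HDMI 2.1", "USB-C", "Thunderbolt"}

def builtin_display_meets_specs(vertical_resolution: int, max_brightness_nits: float) -> bool:
    """Check a built-in display against the 1080p and 300-nit recommendations."""
    return vertical_resolution >= 1080 and max_brightness_nits >= 300

def external_display_meets_specs(supports_hdr10: bool, connection: str) -> bool:
    """Check an external display: HDR10 support plus a listed connection (our assumption)."""
    return supports_hdr10 and connection in HDR_CONNECTIONS

print(builtin_display_meets_specs(1080, 350))           # True
print(external_display_meets_specs(True, "HDMI 1.4"))   # False
```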

HDR10 vs HDR10+

Common Points

- Both are HDR standards.

- HDR10 is an open standard for HDR.

- HDR10+ is a royalty-free standard.

- Both use the same color depth of 10 bits.
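
To see what a 10-bit color depth means in practice, the arithmetic below compares it with the 8 bits per channel that is typical of SDR video (the 8-bit comparison is our addition, not a figure from this article). The helper name `color_stats` is hypothetical.

```python
def color_stats(bits_per_channel: int) -> tuple[int, int]:
    """Return (levels per channel, total RGB colors) for a given bit depth."""
    levels = 2 ** bits_per_channel
    return levels, levels ** 3

for bits in (8, 10):
    levels, colors = color_stats(bits)
    print(f"{bits}-bit: {levels} levels per channel, {colors:,} colors")
```

Ten bits give 1,024 levels per channel, or about 1.07 billion colors across three channels, compared with 256 levels and about 16.8 million colors at 8 bits.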

Differences

- Popularity: HDR10 is more widespread.

- Peak Brightness: HDR10 content is mastered from 400 to 4,000 cd/m², while HDR10+ content is mastered from 1,000 to 4,000 cd/m².

- Metadata: HDR10 uses static metadata, while HDR10+ uses dynamic metadata.

- Tone Mapping: HDR10 applies the same brightness and tone mapping to the entire content, while HDR10+ adjusts the brightness and tone mapping per scene.

How to Achieve Dolby Vision?

First, you need a projector or other device that supports Dolby Vision, for example, the Hisense PX1-PRO, Formovie Theater, or Xiaomi Laser Cinema 2.

Second, you need content graded in Dolby Vision.

Then you can enjoy Dolby Vision in your home theater.
